In Lucene 9.7.0 we added support that leverages SIMD instructions to perform data-parallelization of vector similarity computations. Now we’re pushing this even further with the use of Fused Multiply-Add (FMA).

What is FMA?

Multiply and add is a common operation that computes the product of two numbers and adds that product to a third number. These types of operations are performed over and over during vector similarity computations.

Fused multiply-add (FMA) is a single operation that performs both the multiply and add operations in one - the multiplication and addition are said to be “fused” together. FMA is typically faster than a separate multiplication and addition because most CPUs model it as a single instruction.

FMA also produces more accurate results. Separate multiply and add operations on floating-point numbers involve two roundings, one for the multiplication and one for the addition, since they are separate instructions that each need to produce a result. That is, effectively, round(round(a * b) + c).

FMA, by contrast, has a single rounding, which applies only to the combined result of the multiplication and addition. That is, effectively, round(a * b + c).

Within the FMA instruction, a * b produces an infinite-precision intermediate result that is added to c before the final result is rounded. This eliminates one rounding compared to separate multiply and add operations, which results in better accuracy.
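To see the single rounding in action, here is a minimal Java sketch. The class and method names are ours, purely for illustration. It picks inputs where the exact product needs more bits than a float can hold, so the separate multiply loses a tiny term that Math.fma keeps.

```java
// Illustrative sketch: two roundings (multiply, then add) versus FMA's single rounding.
class FmaRoundingDemo {

  // Separate multiply and add: the product is rounded to float before the addition.
  static float multiplyThenAdd(float a, float b, float c) {
    return a * b + c;
  }

  // Fused multiply-add: a * b + c is computed exactly, then rounded once.
  static float fused(float a, float b, float c) {
    return Math.fma(a, b, c);
  }

  public static void main(String[] args) {
    float a = Math.nextUp(1.0f);   // 1 + 2^-23, the smallest float above 1
    float c = -(1.0f + 0x1p-22f);  // -(1 + 2^-22)

    // Exactly, a * a = 1 + 2^-22 + 2^-46. Rounding the product to float drops the 2^-46 term.
    System.out.println(multiplyThenAdd(a, a, c));    // prints 0.0
    System.out.println(fused(a, a, c) == 0x1p-46f);  // prints true
  }
}
```

The inputs are deliberately contrived to make the lost term visible. In a real similarity computation the differences are far smaller, but they accumulate over many elements.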

Under the hood

So what has actually changed? In Lucene we have replaced the separate multiply and add operations with a single FMA operation. The scalar variants now use Math::fma, while the Panama vectorized variants use FloatVector::fma.
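As a rough illustration of the scalar side of this change, the sketch below contrasts a plain dot product loop with one that uses Math.fma. This is not Lucene's actual code: the class and method names are hypothetical, and the real implementation is more heavily optimized.

```java
// Hypothetical sketch of a scalar dot product, before and after switching to Math.fma.
class ScalarDotProductSketch {

  // Before: a separate multiply and add per element (two roundings each).
  static float dotProductPlain(float[] a, float[] b) {
    checkDimensions(a, b);
    float sum = 0f;
    for (int i = 0; i < a.length; i++) {
      sum += a[i] * b[i];
    }
    return sum;
  }

  // After: one fused multiply-add per element (a single rounding each).
  static float dotProductFma(float[] a, float[] b) {
    checkDimensions(a, b);
    float sum = 0f;
    for (int i = 0; i < a.length; i++) {
      sum = Math.fma(a[i], b[i], sum);
    }
    return sum;
  }

  private static void checkDimensions(float[] a, float[] b) {
    if (a.length != b.length) {
      throw new IllegalArgumentException("vector dimensions differ: " + a.length + " != " + b.length);
    }
  }

  public static void main(String[] args) {
    float[] a = {1f, 2f, 3f, 4f};
    float[] b = {5f, 6f, 7f, 8f};
    System.out.println(dotProductPlain(a, b)); // prints 70.0
    System.out.println(dotProductFma(a, b));   // prints 70.0
  }
}
```

With small integer-valued inputs like these, both variants are exact and agree. They can differ in the last bits once the products need rounding.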

If we look at the disassembly we can see the effect that this change has had. Previously we saw this kind of code pattern for the Panama vectorized implementation of dot product.

    vmovdqu32 zmm0,ZMMWORD PTR [rcx+r10*4+0x10]
    vmulps zmm0,zmm0,ZMMWORD PTR [rdx+r10*4+0x10]
    vaddps zmm4,zmm4,zmm0

The vmovdqu32 instruction loads 512 bits of packed doubleword values from a memory location into the zmm0 register. The vmulps instruction then multiplies the values in zmm0 with the corresponding packed values from a memory location, and stores the result in zmm0. Finally, the vaddps instruction adds the 16 packed single precision floating-point values in zmm0 with the corresponding values in zmm4, and stores the result in zmm4.

With the change to use FloatVector::fma, we see the following pattern:

    vmovdqu32 zmm0,ZMMWORD PTR [rdx+r11*4+0xd0]
    vfmadd231ps zmm4,zmm0,ZMMWORD PTR [rcx+r11*4+0xd0]

Again, the first instruction is similar to the previous example, where it loads 512 bits of packed doubleword values from a memory location into the zmm0 register. The vfmadd231ps instruction (this is the FMA instruction) multiplies the values in zmm0 with the corresponding packed values from a memory location, adds that intermediate result to the values in zmm4, performs rounding, and stores the resulting 16 packed single precision floating-point values in zmm4.

The vfmadd231ps instruction is doing quite a lot! It’s a clear signal of intent to the CPU about the nature of the computations that the code is running. Given this, the CPU can make smarter decisions about how this is done, which typically results in improved performance (and accuracy as previously described).

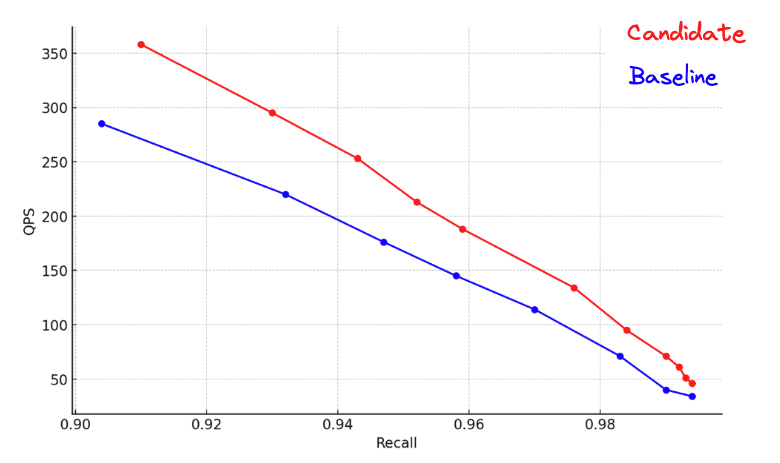

Is it fast?

In general, the use of FMA results in improved performance, but as always, you need to benchmark! Thankfully, Lucene deals with quite a bit of complexity when determining whether to use FMA, so you don’t have to. This includes checking whether the CPU even supports FMA, checking whether FMA is enabled in the Java Virtual Machine, and enabling FMA only on architectures where it has proven to be faster than separate multiply and add operations. As you can probably tell, this heuristic is not perfect, but it goes a long way toward making the out-of-the-box experience good. While accuracy is improved with FMA, we see no negative effect on pre-existing similarity computations when FMA is not enabled.
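The shape of such a heuristic can be sketched as follows. This is a hypothetical illustration rather than Lucene's actual logic: the class, the method names, and the boolean inputs are our own assumptions. The one concrete piece is reading the HotSpot UseFMA flag through HotSpotDiagnosticMXBean, which only works on HotSpot-based JVMs.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import java.lang.management.ManagementFactory;

// Hypothetical sketch of an "is FMA worth using here?" check. Not Lucene's real heuristic.
class FmaHeuristicSketch {

  // Reads the HotSpot UseFMA flag. Returns false if the bean or the flag is unavailable.
  static boolean jvmFmaEnabled() {
    try {
      HotSpotDiagnosticMXBean bean =
          ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
      return bean != null && Boolean.parseBoolean(bean.getVMOption("UseFMA").getValue());
    } catch (RuntimeException e) {
      // For example, IllegalArgumentException when the JVM does not know the flag.
      return false;
    }
  }

  // All three conditions must hold. How CPU support and "known faster" are
  // determined is left to the caller in this sketch.
  static boolean shouldUseFma(boolean cpuSupportsFma, boolean jvmFmaEnabled, boolean fmaKnownFasterHere) {
    return cpuSupportsFma && jvmFmaEnabled && fmaKnownFasterHere;
  }

  public static void main(String[] args) {
    System.out.println("UseFMA flag enabled: " + jvmFmaEnabled());
  }
}
```

Keeping the decision as a pure function of three booleans makes it easy to test, while the platform-specific probing stays isolated in one place.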

Along with the use of FMA, the suite of vector similarity functions got some (more) love. Dot product, square distance, and cosine have all been updated, in both the scalar and Panama vectorized variants. Optimizations have been applied based on inspection of the disassembly and empirical experiments, bringing improvements that help fill the pipeline and keep the CPU busy, mostly through more consistent and targeted loop unrolling, as well as the removal of data dependencies within loops.
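To give a flavor of what loop unrolling and removing data dependencies look like, here is a minimal scalar sketch of square distance. It is not Lucene's implementation: the class name, the unroll factor of four, and the way the accumulators are combined are our own choices for illustration. Each accumulator depends only on its own previous value, so the four FMA chains can proceed independently instead of waiting on one running sum.

```java
// Hypothetical sketch: square distance, unrolled by four with independent accumulators.
class UnrolledSquareDistanceSketch {

  static float squareDistance(float[] a, float[] b) {
    if (a.length != b.length) {
      throw new IllegalArgumentException("vector dimensions differ: " + a.length + " != " + b.length);
    }
    float acc0 = 0f, acc1 = 0f, acc2 = 0f, acc3 = 0f;
    int i = 0;
    int bound = a.length & ~3; // largest multiple of 4 that is <= length

    // Main loop: four independent dependency chains, one per accumulator.
    for (; i < bound; i += 4) {
      float d0 = a[i] - b[i];
      float d1 = a[i + 1] - b[i + 1];
      float d2 = a[i + 2] - b[i + 2];
      float d3 = a[i + 3] - b[i + 3];
      acc0 = Math.fma(d0, d0, acc0);
      acc1 = Math.fma(d1, d1, acc1);
      acc2 = Math.fma(d2, d2, acc2);
      acc3 = Math.fma(d3, d3, acc3);
    }
    float sum = (acc0 + acc1) + (acc2 + acc3);

    // Tail loop: handles the remaining 0 to 3 elements.
    for (; i < a.length; i++) {
      float d = a[i] - b[i];
      sum = Math.fma(d, d, sum);
    }
    return sum;
  }

  public static void main(String[] args) {
    float[] a = {1f, 2f, 3f, 4f, 5f, 6f};
    float[] b = {2f, 4f, 6f, 8f, 10f, 12f};
    System.out.println(squareDistance(a, b)); // prints 91.0
  }
}
```

Note that splitting the sum across accumulators changes the order of the floating-point additions, so results can differ in the last bits from a single-accumulator loop.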

It’s not straightforward to put concrete performance improvement numbers on this change, since the effect spans multiple similarity functions and variants, but we see positive throughput improvements, from single-digit percentages in floating-point dot product to higher double-digit percentage improvements in cosine. The byte-based similarity functions show similar throughput improvements.

Wrapping Up

In Lucene 9.7.0, we added the ability to enable an alternative, faster implementation of the low-level primitive operations used by Vector Search through SIMD instructions. In the upcoming Lucene 9.9.0, we build upon this to leverage faster FMA instructions, as well as to apply optimization techniques more consistently across all the similarity functions. Previous versions of Elasticsearch are already benefiting from SIMD, and the upcoming Elasticsearch 8.12.0 will have the FMA improvements.

Finally, I'd like to call out Lucene PMC member Robert Muir for continuing to make improvements in this area, and for the enjoyable and productive collaboration.